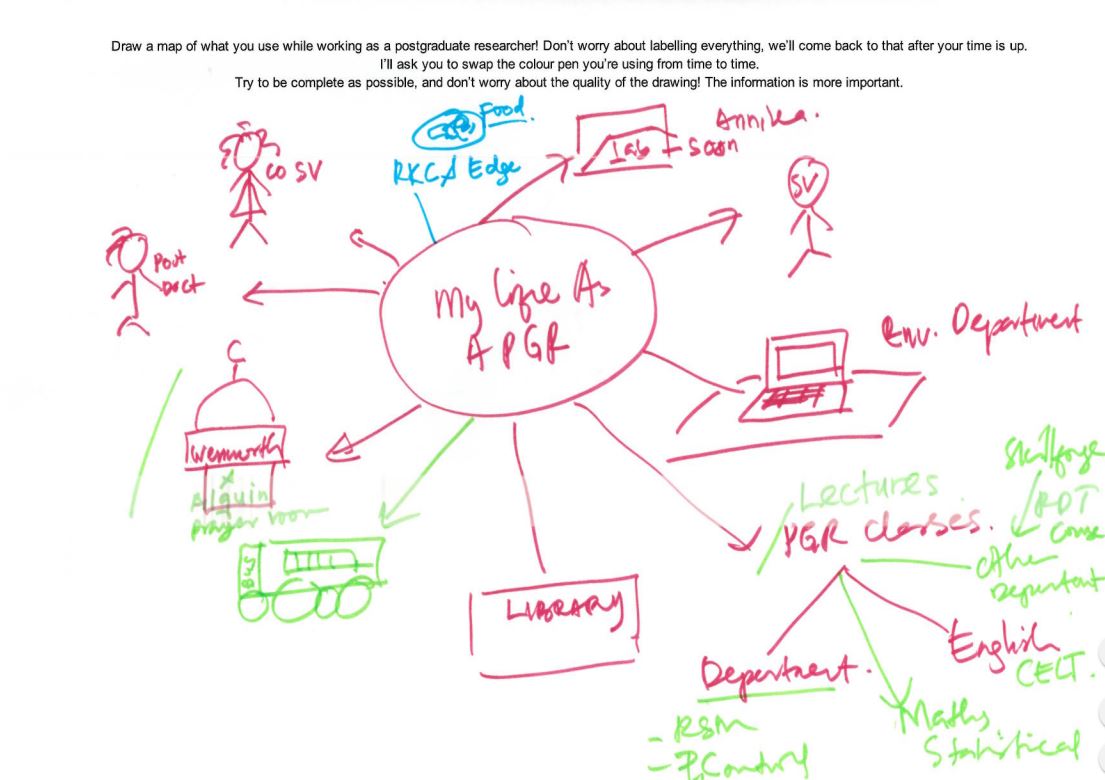

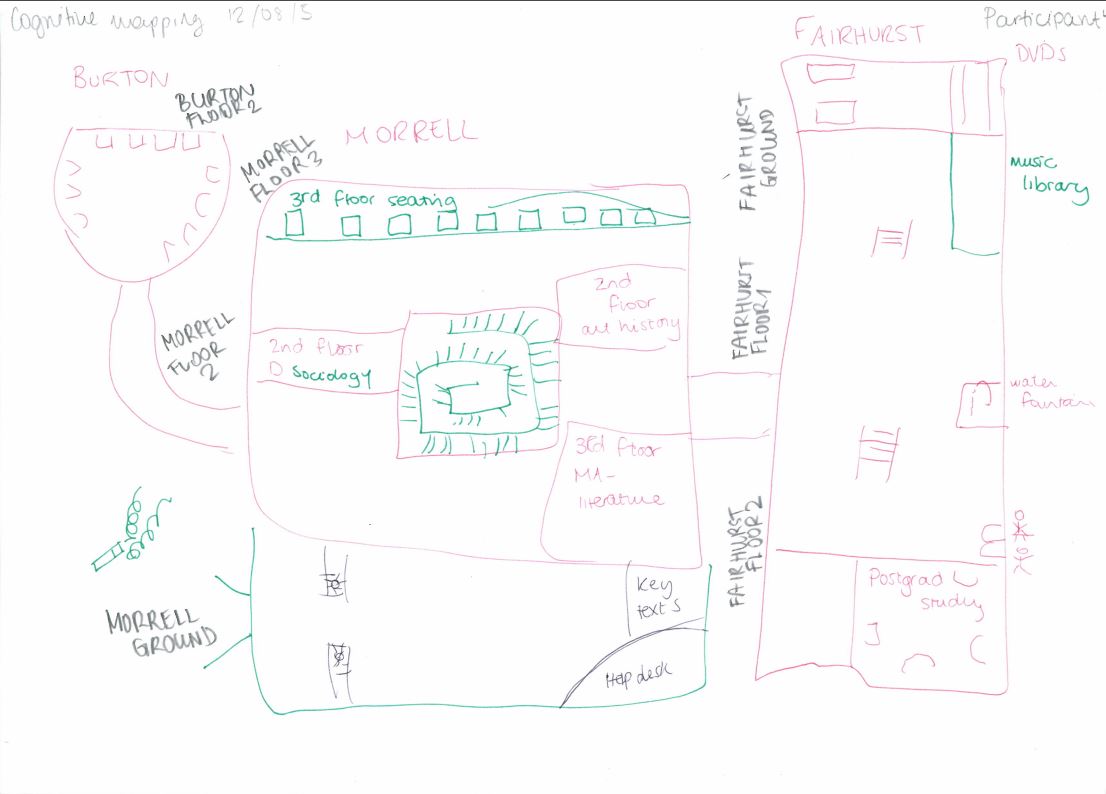

It was a great way of getting the participants to think about the areas I would end up interviewing them on, and the temporal and relational information captured in the map made it easier to pick up on each participant’s thoughts. One good example of this is how a participant placed importance on their desk: they drew it as their map’s central element early on, and branched everything off of that central element. This was reflected in their interview, where they emphasised the importance of that desk to them.

“Non-directed interviews”

Using the participant’s cognitive map as a ‘guide’, I would then conduct a non-directed interview. This involved taking an almost passive, neutral stance in everything I asked about, primarily allowing the concepts brought up on the participant’s map to direct the conversation – then, after those points had been exhausted, I would consult my own discussion guide to cover the rest of the areas of interest.

Conducting the interview in this way was initially difficult for me – it was sometimes hard to probe without being ‘aggressive’ (asking weighted questions or changing the topic), and I sometimes struggled to facilitate the conversation without suggesting topics to talk about.

There was immense value in conducting the session in this way, however. By focusing the interview on the topics participants brought up, the information gathered more closely reflected the participant’s “perspective” – their habits, their opinions and their choices, mostly on what they were aware of in the discussed areas. Gathering the information in this way allowed me to deliver insight on issues of awareness more effectively.

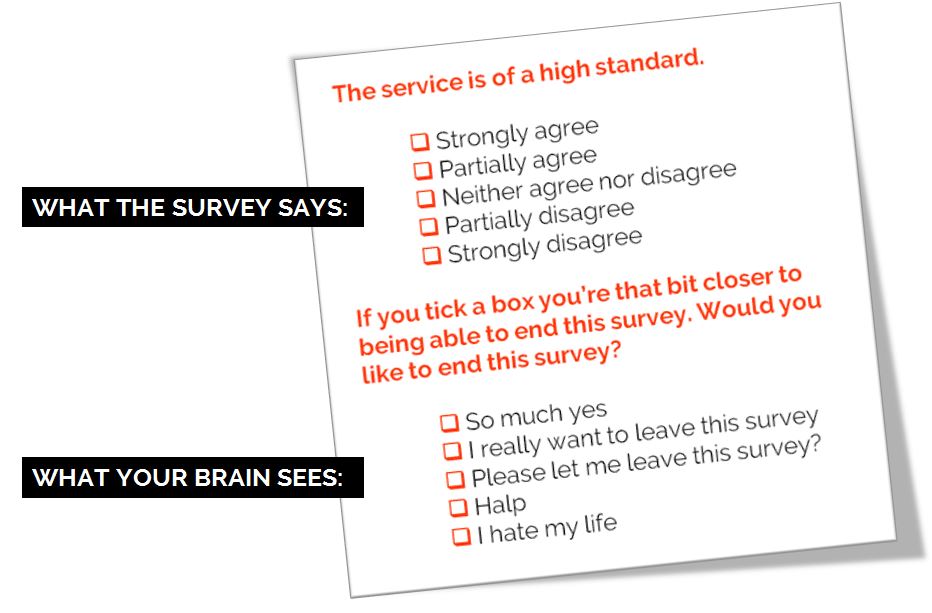

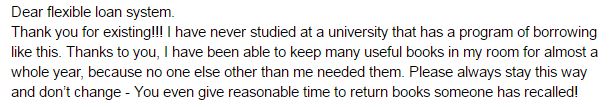

Love/Break Up Letters

Finally, participants were asked to write a ‘love’ or ‘break up’ letter. By asking participants to address this letter to a personified IT or library service, we hoped to draw out the emotions of participants towards those services, and easily establish positive/pain points.

My participants seemed to be very polarised by the exercise; people either really got into it, or they really didn’t. Upon reflection, the abstract nature of the exercise may have made some participants uncomfortable, especially knowing that their letters would be scrutinised. However, while I feel that this technique didn’t work in a 1 on 1 session, there is merit to trying it out in a pop-up-desk context, or a ‘prize raffle’ format – this would allow for many responses, and for the easy identification of pain points across services.

All in all, I felt that the techniques allowed me to attain some real insight into PGRs, and despite the initial nerves, I really enjoyed conducting these sessions with participants. But while I’m singing the praises of these techniques now, back before I started my internship, my mentality for designing around users (or stakeholders in general) was one of appeasement – design a website that does what stakeholders need it to do, and fix any issues preventing its smooth use. A real ‘checklist’ oriented approach. Historically, I had followed this approach in my degree through a type of observation called ‘usability testing’, where I noted any issues users had when doing tasks that I had set.

So, heading into this internship, I had expected to do just that: observe more, make a list of issues to fix, and suggest some solutions – tick those boxes off, one by one, on the way to a “good UX”. But, throughout my internship, I realised this approach just yields a ‘passable’ user experience – you end up with something that works, but not necessarily something that’s good.

Example of Findings: Lonely Researchers

For example, one of my participants told me something that really struck me: they said that when they were based at a general desk, they felt disconnected from their department. It was always possible to contact or visit their supervisors, or use the department testing rooms, or go out of their way to interact with their peers, but not being based alongside all of that meant that they felt ‘distant’ from their department. This changed when they were offered a desk inside their department. Besides improvements on all of those fronts, they reported feeling ‘valued’ as a member of the university because of it.

The importance of ‘department community’ – being alongside your researcher peers and supervisor so that interaction is readily possible – was prominent in my discussion with some participants. During my research, I found that while non-department PGR study spaces covered various noise levels (something participants valued), those spaces did not facilitate the kind of ‘natural interaction’ that only happens when PGRs and supervisors are all based together – and so PGRs based outside their department missed out on this.

My approach of “observation to find issues, fix issues” would not have yielded this type of insight – I would have thought along the lines of “they don’t really like the silence in this building”, suggested changing the noise level policy and called it a day. It wouldn’t have made much headway in creating a better UX for the people based outside their departments.

But, it finally dawned on me during my time with the library team at York: good UX necessitates understanding what your user values, what is important to them, and actively working with that in mind. Which worked out for me, in the end: UX is more satisfying when it isn’t just making something that works and ticking boxes.